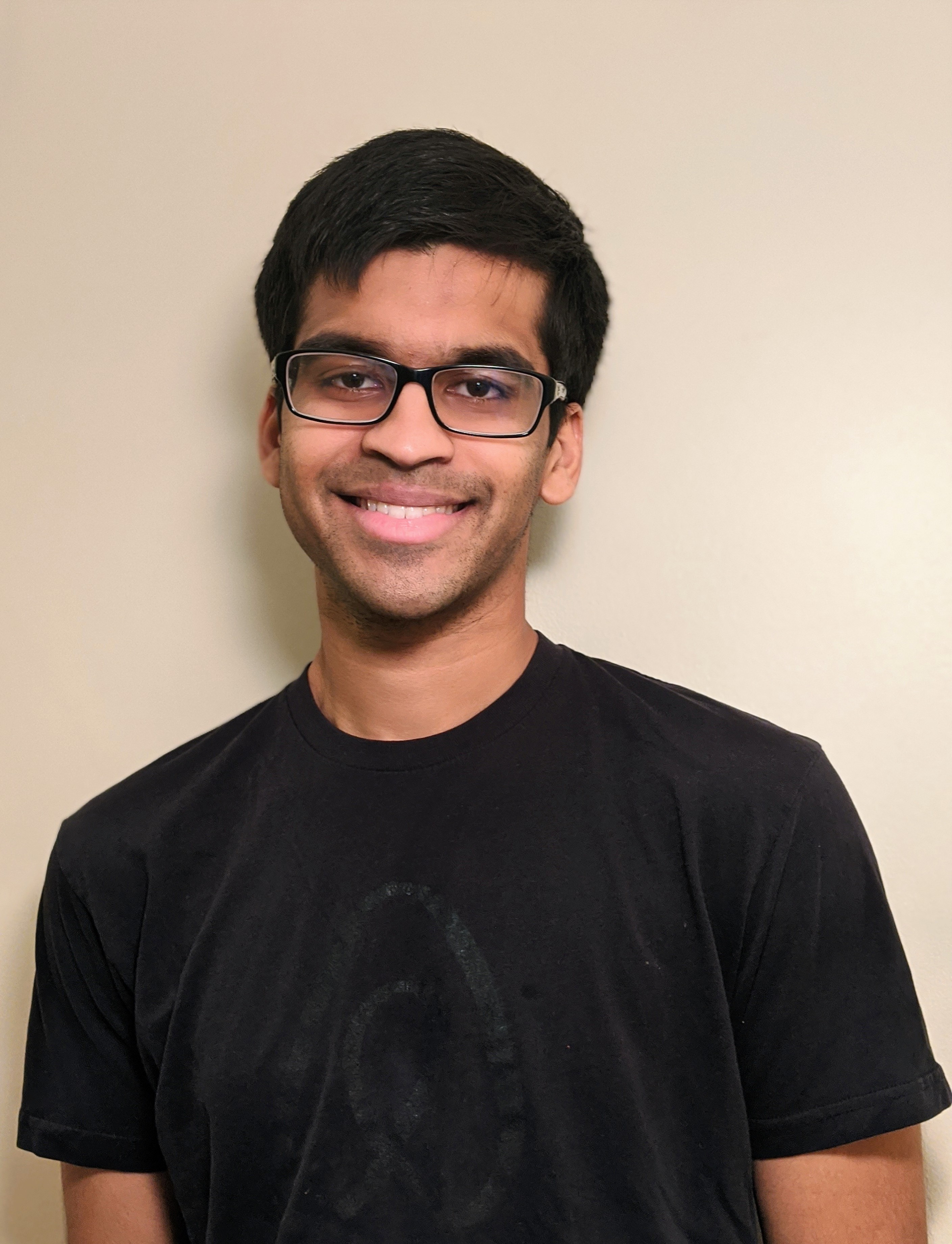

Rohan Chitnis

I am an AI Research Scientist working on applied reinforcement learning and signal processing at Meta Reality Labs. Until May 2022, I was a PhD student in MIT EECS, within CSAIL's Learning and Intelligent Systems group. In May 2018, I received an MS in EECS from MIT. In May 2016, I received a BS in EECS, with Highest Honors, from UC Berkeley.

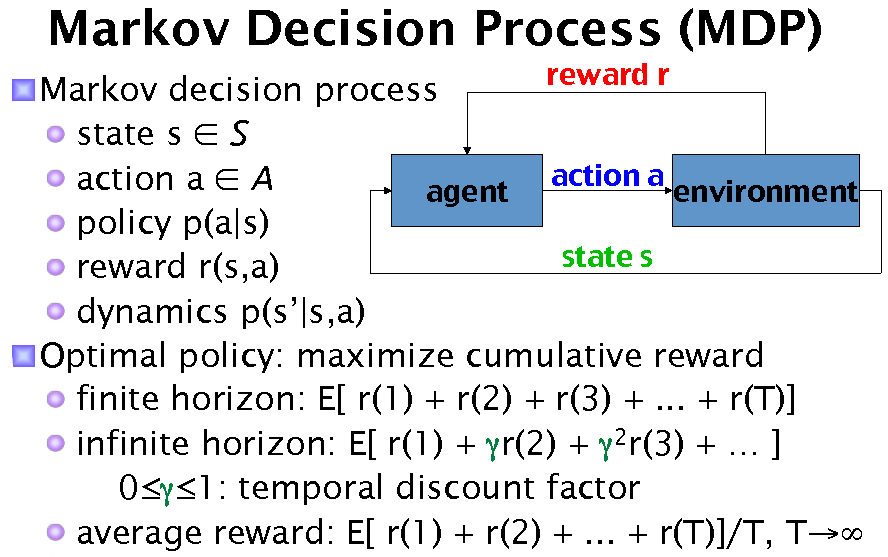

My PhD work was in the field of artificial intelligence for robotics. It focused on integrated planning and machine learning for solving complicated, long-horizon robotic tasks. In particular, I thought extensively about abstraction and representation learning: how can we learn task-specific representations that are suitable for solving a particular problem at hand? What aspects of the world can safely be ignored, or treated holistically? How can we turn challenging, underspecified robotic tasks into smaller, tractable problems? Please check out my publications or the blog post referenced below if you would like to learn more.

I have enjoyed serving as a Teaching Assistant many times throughout my academic career.

News:

My team's work at Meta has been featured in a Nature publication. For this paper, I trained the models used in the wrist task, establishing state-of-the-art wrist-based continuous control with a surface electromyography (sEMG) device.

I am co-organizing a AAAI 2024 Spring Symposium on User-Aligned Assessment of Adaptive AI Systems at Stanford University on March 25-27, 2024.

I am co-organizing the RSS 2023 Workshop on Learning for Task and Motion Planning in Daegu, Korea on July 10, 2023.

I am co-organizing the CoRL 2022 Workshop on Learning, Perception, and Abstraction for Long-Horizon Planning in Auckland, New Zealand on December 15, 2022.

In August 2022, I started a full-time AI Research Scientist position at Meta Reality Labs.

I defended my PhD thesis on April 25, 2022, and graduated in May 2022.

In Summer 2021, I interned at Nuro, on the Planning team. I worked on integrated data-driven and rule-based reasoning to improve the behavior stack of Nuro's passenger-less autonomous vehicles!

In March 2021, I co-authored a blog post that gives an overview of some of my recent research. Feel free to check it out, and let me know if you have any questions or comments!

My paper "CAMPs: Learning Context-Specific Abstractions for Efficient Planning in Factored MDPs" was accepted for a plenary talk (top 12% of accepted papers) at the 2020 Conference on Robot Learning.

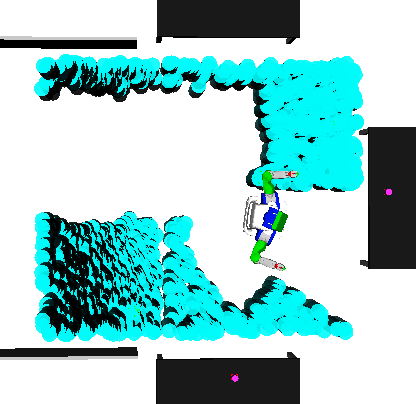

In Summer 2019, I did a research internship with Abhinav Gupta at Facebook AI Research, in the new Pittsburgh office for robotics. I worked on formulations of intrinsic motivation for learning synergistic behavior via deep reinforcement learning. We were able to get robots to perform bimanual manipulation tasks both in simulation and on real Sawyer arms.

My paper "Integrating Human-Provided Information Into Belief State Representation Using Dynamic Factorization" won the Best Paper Award at the 2018 International Workshop on Statistical Relational AI.

In Summer 2017, I did a research internship with Sergey Levine at Google Brain Robotics, working on methods for improving exploration in deep reinforcement learning.

In Summer 2016, I interned as a software engineer at Airbnb, doing machine learning with the Search Ranking team.

In March 2016, I was awarded an NSF GRFP Fellowship (accepted) and an NDSEG Fellowship (declined).

In January 2016, I was selected as one of 40 Finalists for the Hertz Fellowship, a highly reputable fellowship for student researchers in the physical, biological, and engineering sciences.

In December 2015, I was selected as the Runner-up for the 2016 Computing Research Association (CRA) Outstanding Undergraduate Researcher Award (Male, PhD-granting institution).